Capturing movements with machine learning. A four-day workshop at Burg Giebichenstein University of Art and Design.

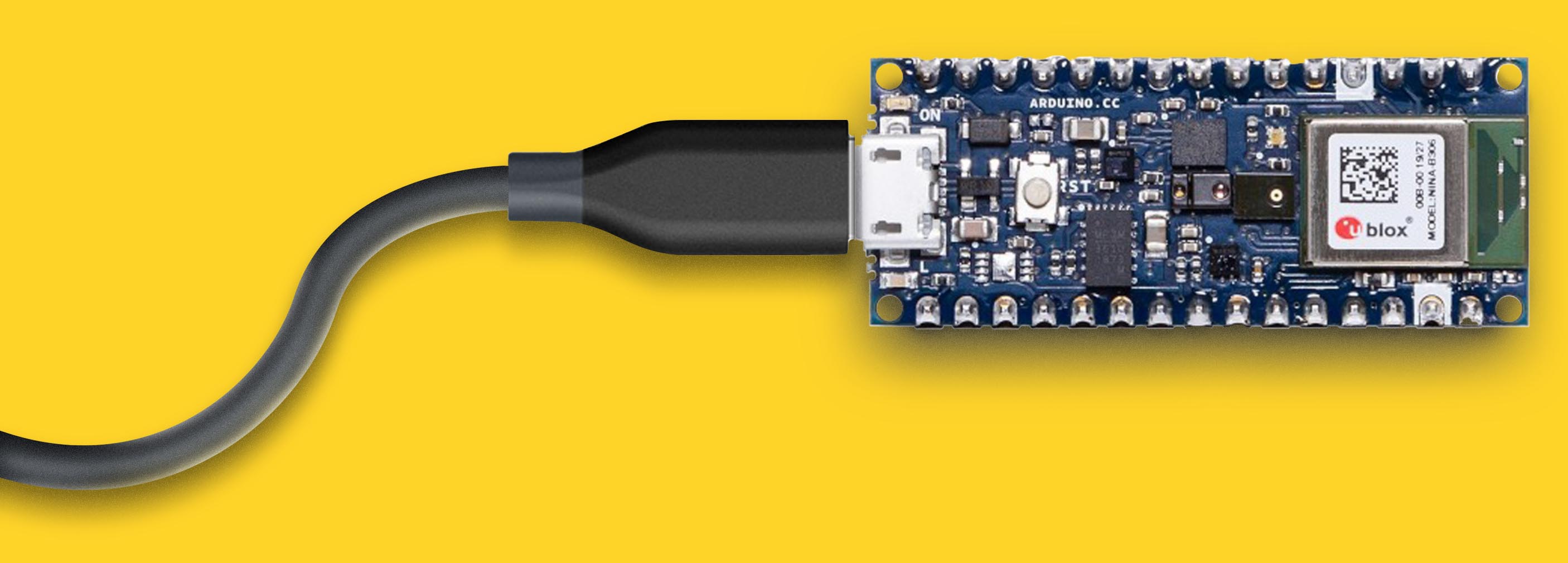

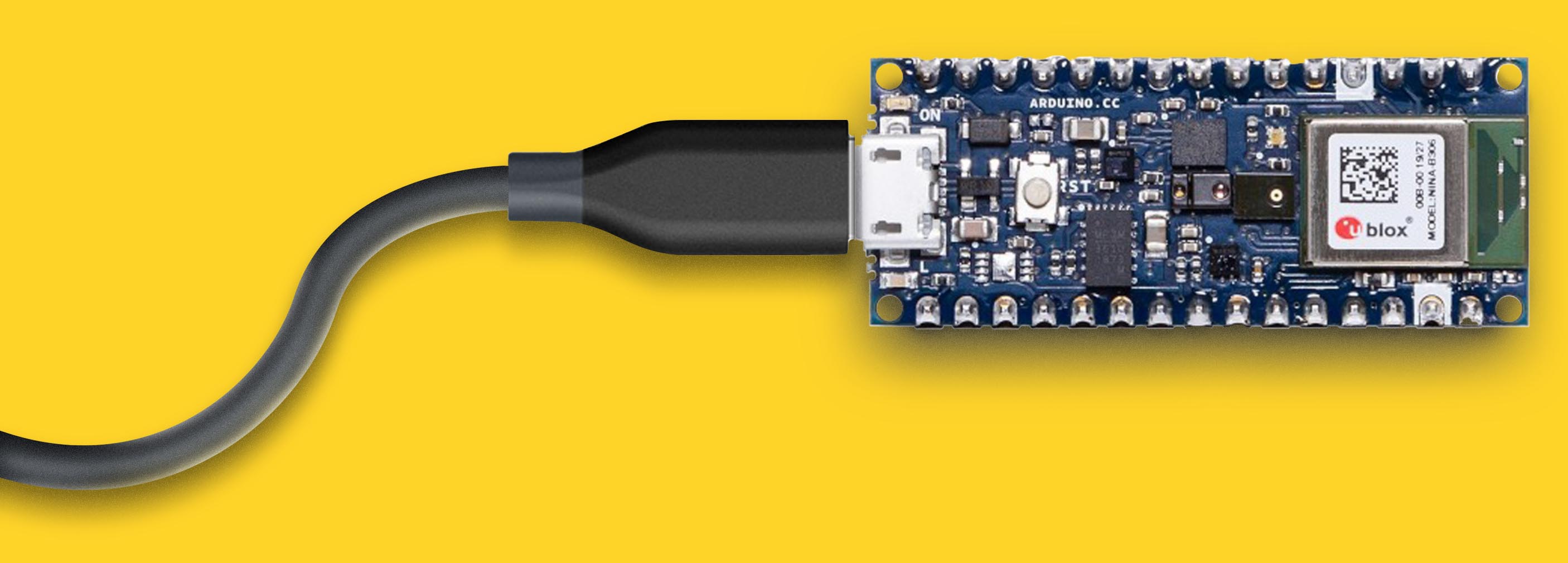

Inspired by the magic wand tutorial by Sandeep Mistry and Don Coleman, we spent some time expanding the functionality of the simple neural network that came with it. A set of movements is collected through the IMU sensors on the Arduino and then used to train a model in a Python program; the trained model is small enough to be deployed to the Arduino. After testing different algorithms for different sequence lengths, we took the enhanced neural networks to our workshop at Burg Halle to let the students experiment with them.
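
To give a feel for why sequence length matters for a model that has to fit on a microcontroller, here is a minimal sketch that counts the parameters of a small fully connected network. The layer sizes, the number of gestures, and the sequence lengths are hypothetical values chosen for illustration, not the configuration used in the workshop; the only assumption taken from the text is that each sample carries IMU readings (here assumed to be three accelerometer and three gyroscope axes).

```python
def dense_param_count(n_inputs, layer_sizes):
    """Count weights and biases of a fully connected network."""
    total = 0
    fan_in = n_inputs
    for units in layer_sizes:
        total += fan_in * units + units  # weight matrix plus one bias per unit
        fan_in = units
    return total


def model_size_kib(n_params, bytes_per_param=4):
    """Approximate size of the raw parameters (float32 by default)."""
    return n_params * bytes_per_param / 1024


CHANNELS = 6        # assumed: 3 accelerometer + 3 gyroscope axes per sample
HIDDEN = [50, 15]   # hypothetical hidden layer sizes
N_GESTURES = 3      # hypothetical number of gesture classes

for samples in (30, 60, 119):  # hypothetical sequence lengths
    params = dense_param_count(samples * CHANNELS, HIDDEN + [N_GESTURES])
    size = model_size_kib(params)
    print(f"{samples:>3} samples: {params:>6} parameters, ~{size:.1f} KiB")
```

In a network like this, almost all parameters sit in the first layer, so halving the sequence length roughly halves the model size.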

In the four-day workshop, a group of art and design students learned the basic mechanics of machine learning and then went on to train their own neural nets on different sets of movement data. The main objective for the students was to experiment with the idea of learning and customizable products.

Using a set of different models we provided through a Google Colab notebook, they could explore how different parameters influence the performance and behavior of the models.

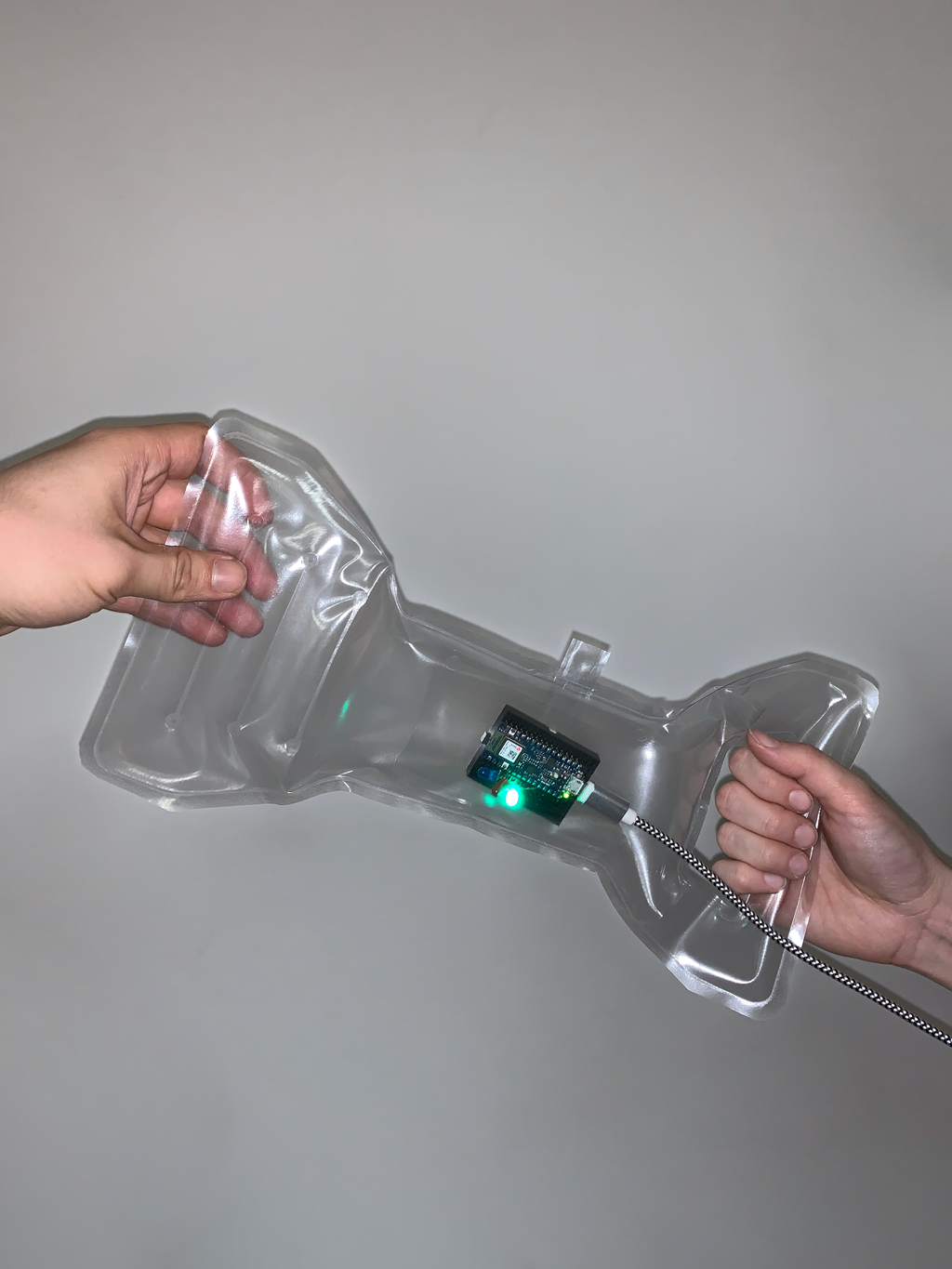

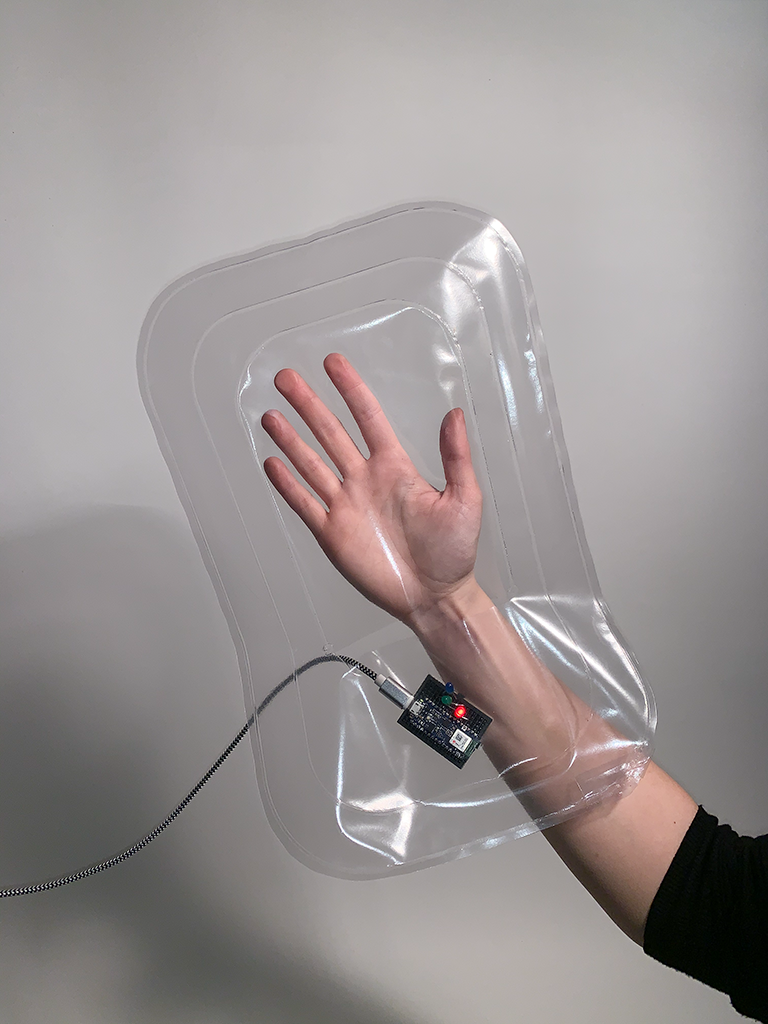

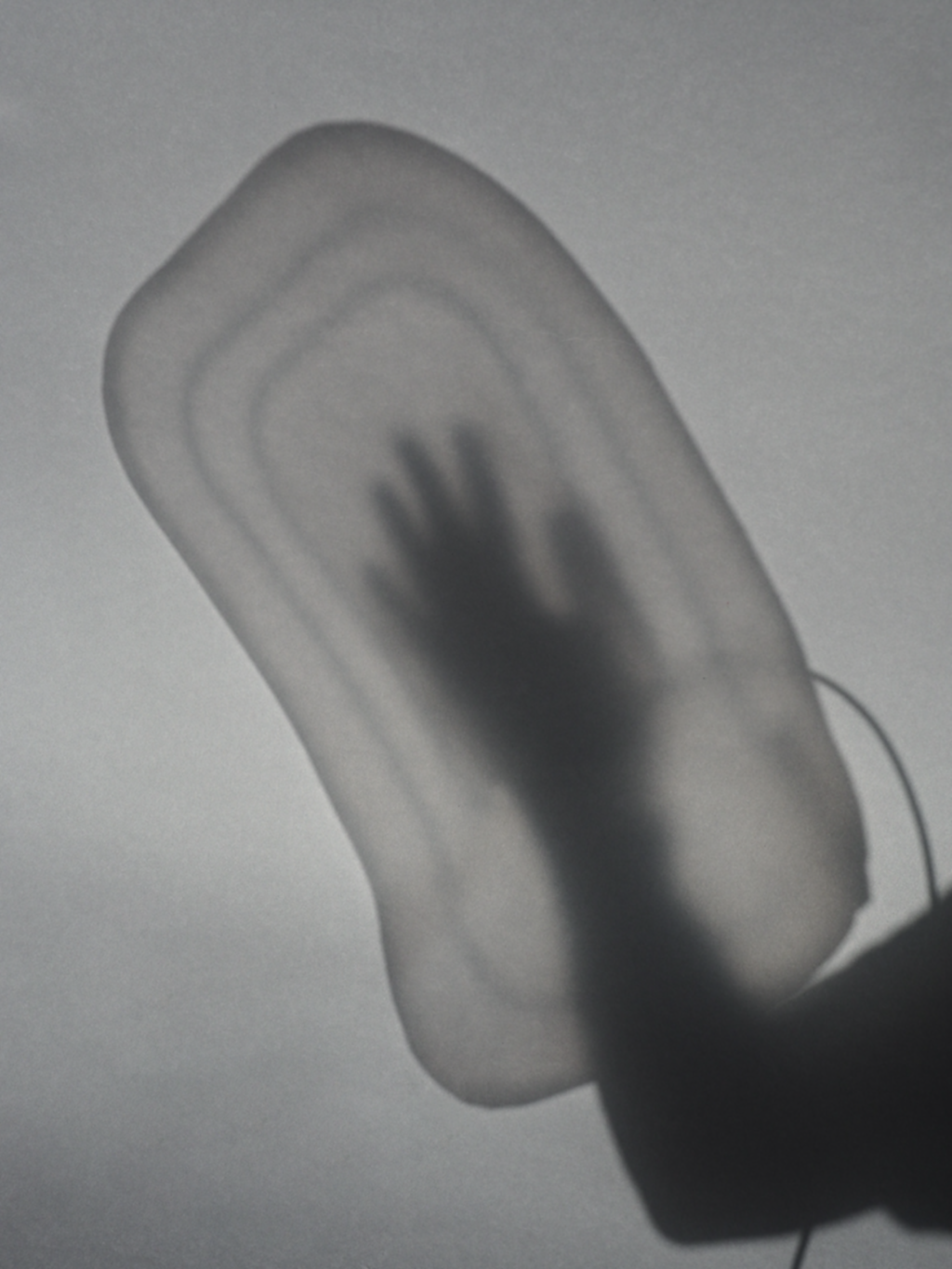

Jasmin Schauer

Marie Gehrhardt

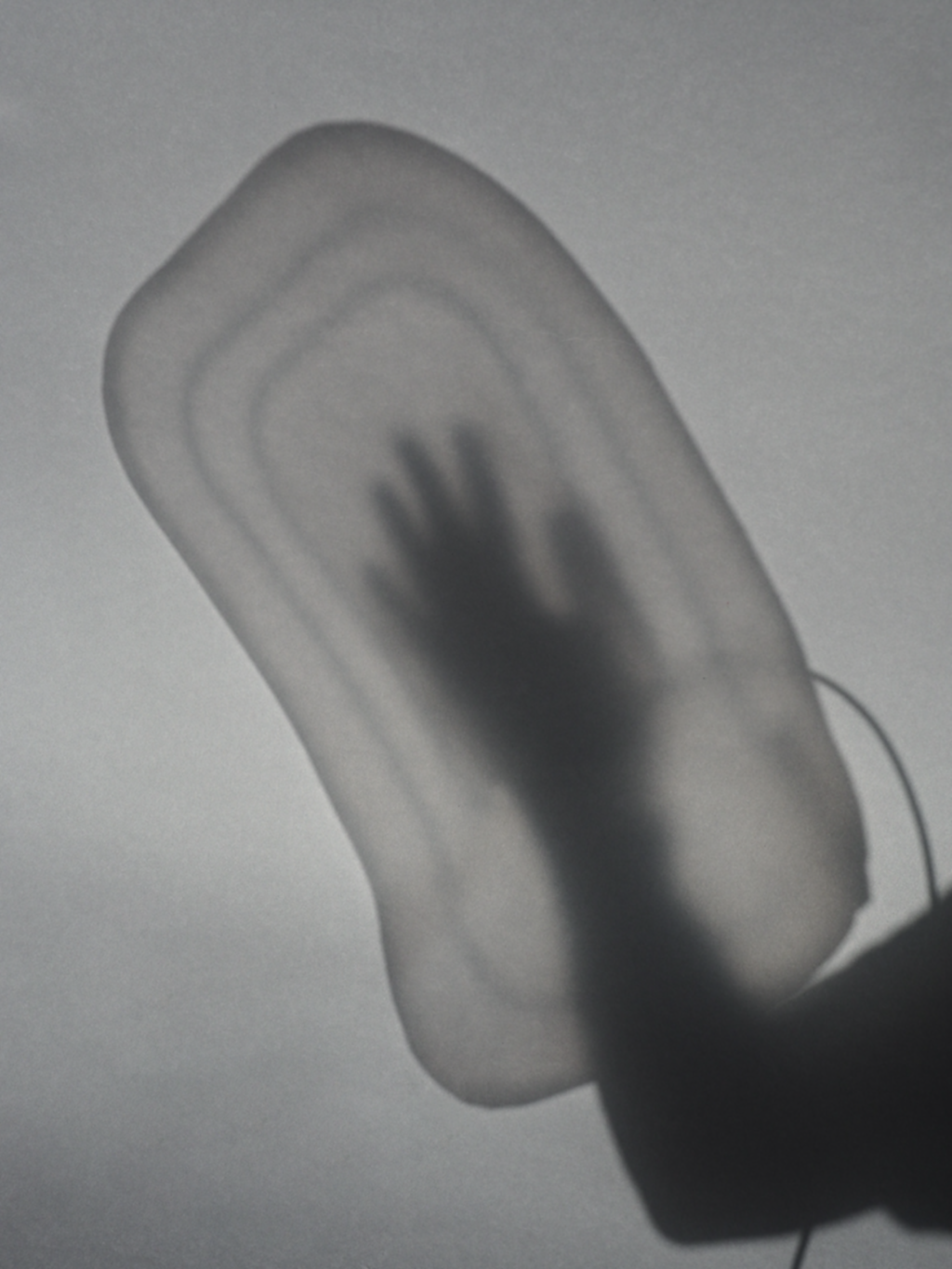

Jasmin and Marie created three different objects that pose a riddle for two participants to solve. By their design, the objects imply three different gestures of affection: hugging, high-fiving, and giving the object to each other. When the participants solve the riddle by performing the gestures, the machine learning model on the Arduino responds with visual confirmation by flashing a colored LED.

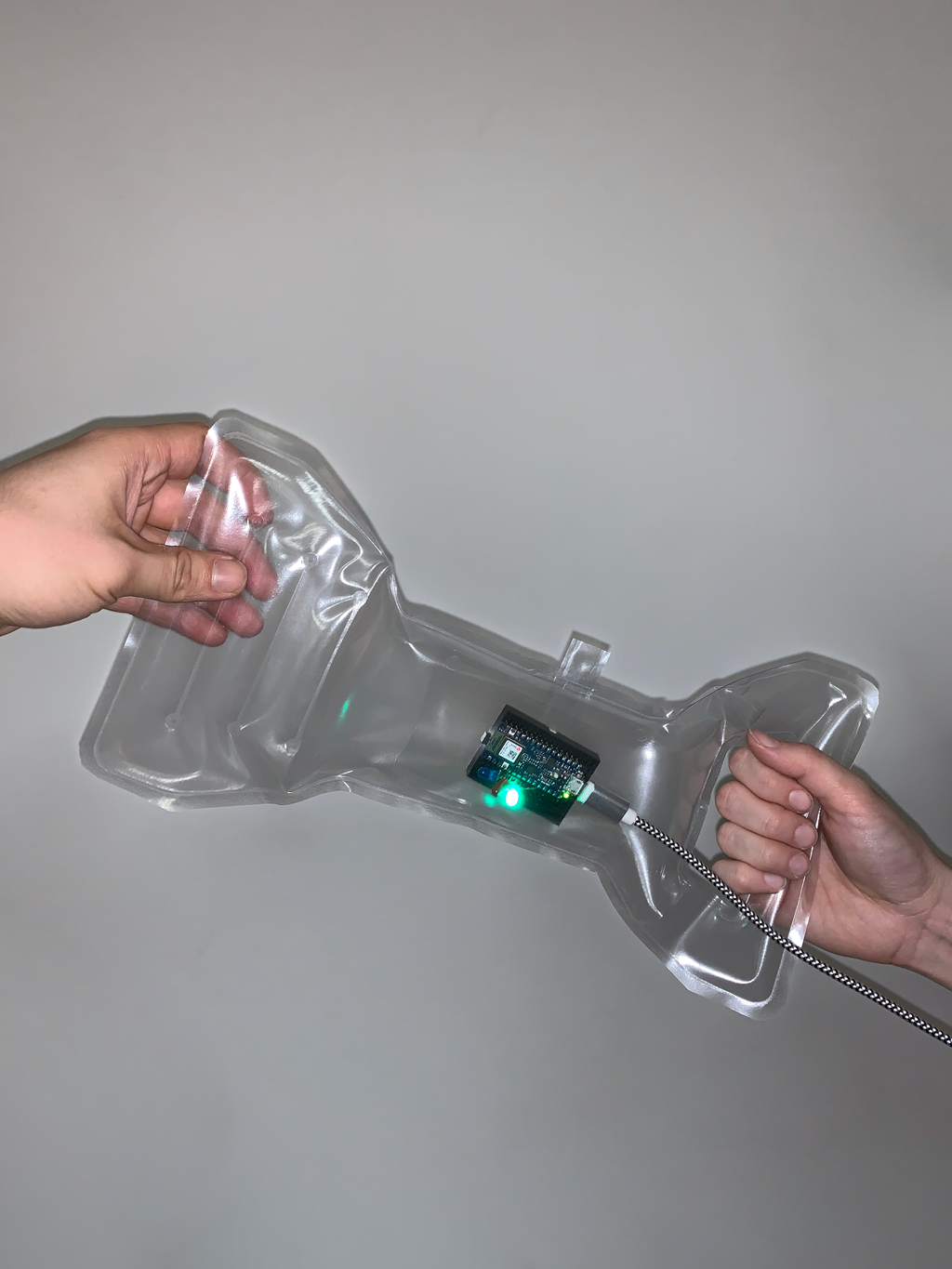

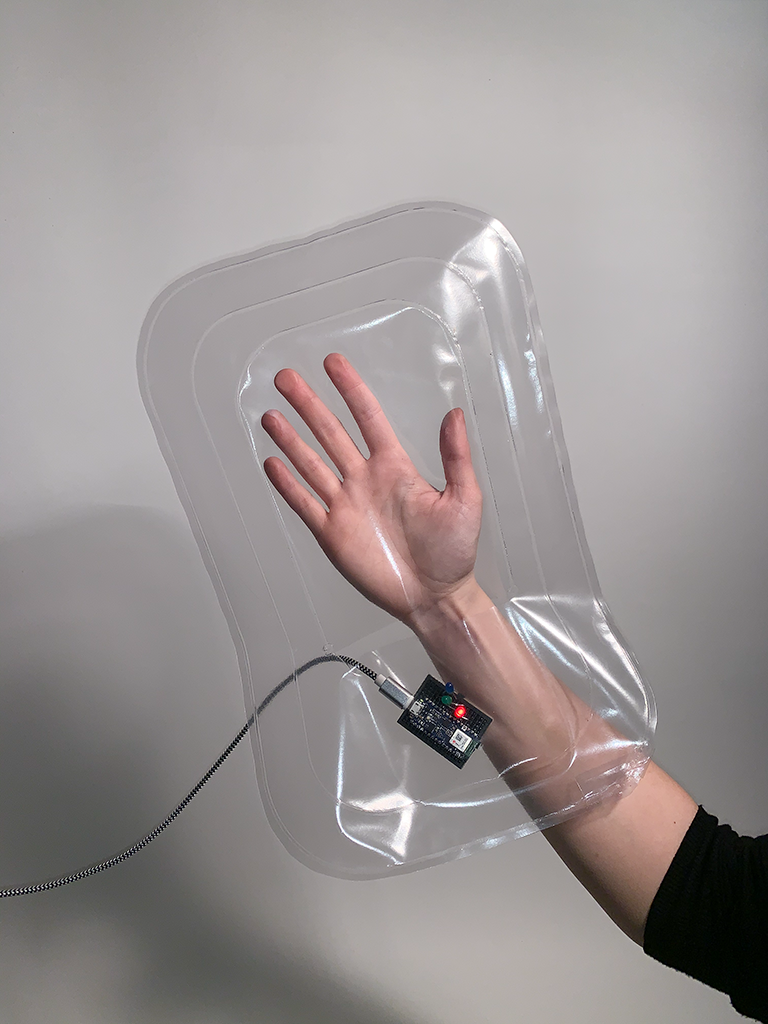

So Jin Park

Christina Klus

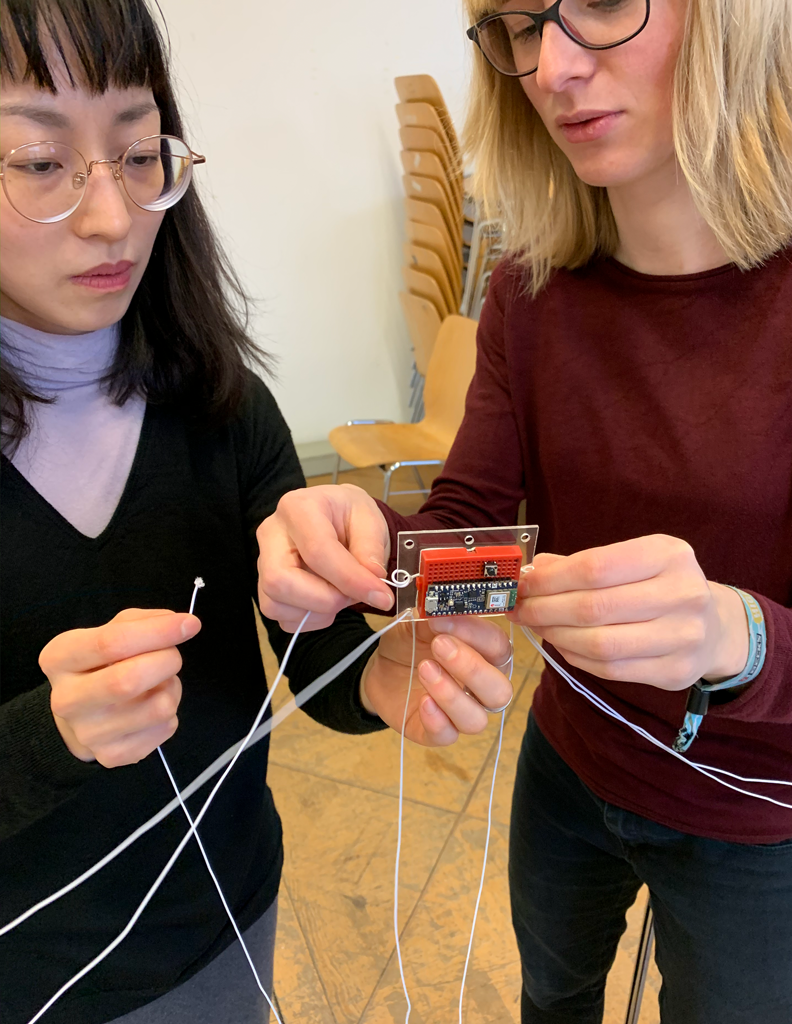

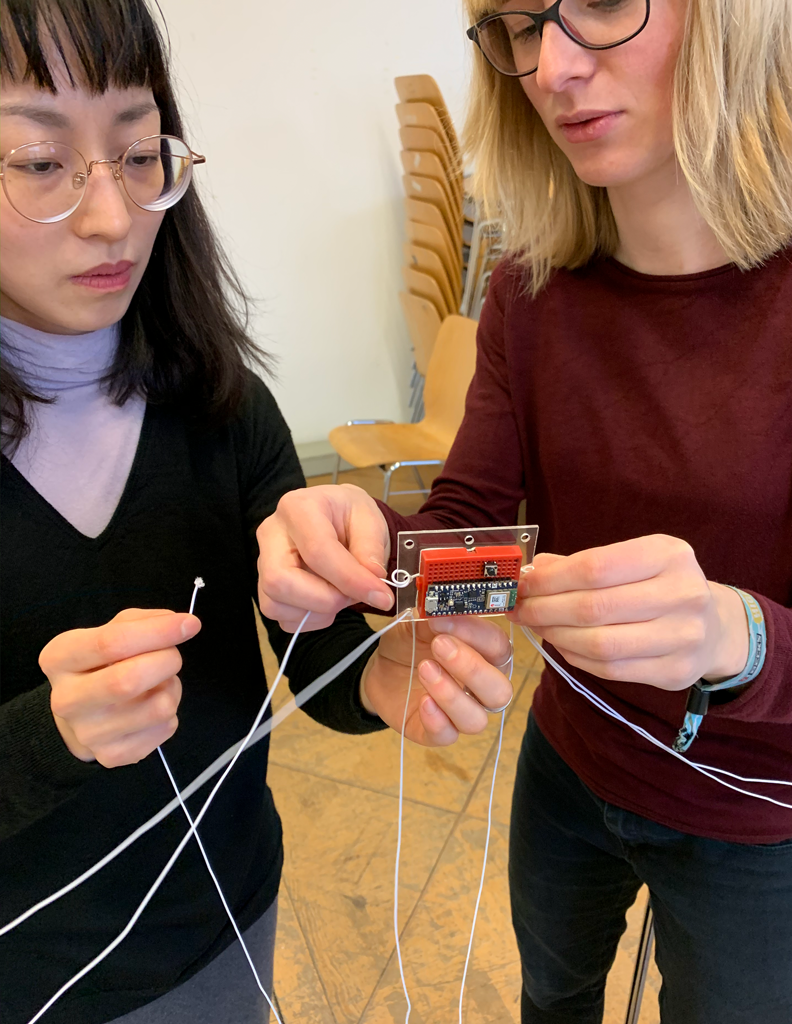

Christina and So Jin explored the scenario of using on-device machine learning to create custom controllers. They concentrated on a specific type of user input: tying the device to a rubber band created a playful interaction, and limiting the device's degrees of freedom defined distinct directions of movement.

Michael Goß

Henrik Spaan

Henrik and Michl used machine learning to implement a very effective technique in dog training. Clicker training remaps the dog's expectation of receiving a reward by substituting a clicking sound for the actual reward. The model they trained with very few samples was impressive: it performed extremely well, even with comparatively short movements and a real dog, Nacho.

Yang Ni

Yang Ni trained a machine learning model to count forehands and backhands during a game of ping-pong. With machine learning models being easily trainable and deployable, it becomes possible for users to quantify custom movements, as is already common for standardized sequences like steps or swimming laps.

Jonas Otto

Like So Jin and Christina, Jonas experimented with a new type of controller that the designer can train to detect different types of motion. By cushioning the controller with memory foam, he played with the idea of creating new types of interactions, like throwing the controller away, on the floor, or against a wall.

Fridolin Richter

Fridolin trained a model to predict the direction the dancing lead is going to take with his or her next step. He used a very short sequence to pass only the initial movement of the foot to the model, which could then light up the LEDs on his head to indicate to his dancing partner whether he was going to turn left or right.

Lucas Kurz

Save the date: Lucas also chose to attach the board to his foot, so it wouldn't be noticed by his dates. He trained a model to detect a set of secret gestures he could perform with his feet. The Arduino would then send a message to a friend to rescue him if the date didn't go well.

The students learned how to collect and handle their own data and how to compare, clean, and reformat it so it can be plugged into a machine learning model. They also learned about the basic structure of developing machine learning algorithms and their deployment on embedded devices with TensorFlow Lite for Microcontrollers.
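
The cleaning and reformatting step can be sketched roughly as follows. This is an illustration under stated assumptions rather than the notebook used in the workshop: it assumes the recordings arrive as CSV text with six values per sample (`aX,aY,aZ,gX,gY,gZ`), that blank lines separate recordings, and that the sensor ranges are ±4 g and ±2000 degrees per second. All function names and constants are hypothetical.

```python
import csv
import io

SAMPLES_PER_GESTURE = 4   # hypothetical; real recordings would be longer
ACC_RANGE_G = 4.0         # assumed accelerometer range of +/-4 g
GYRO_RANGE_DPS = 2000.0   # assumed gyroscope range of +/-2000 deg/s


def split_recordings(text):
    """Split CSV text into recordings; blank lines separate recordings."""
    recordings, current = [], []
    for row in csv.reader(io.StringIO(text)):
        if not any(cell.strip() for cell in row):
            if current:  # a blank line closes the current recording
                recordings.append(current)
                current = []
            continue
        try:
            current.append([float(cell) for cell in row])
        except ValueError:
            continue  # skip the header line and unreadable rows
    if current:
        recordings.append(current)
    return recordings


def clean(recordings):
    """Keep only recordings with the expected number of six-value samples."""
    return [
        rec for rec in recordings
        if len(rec) == SAMPLES_PER_GESTURE and all(len(s) == 6 for s in rec)
    ]


def to_feature_vector(recording):
    """Scale every value to the range 0..1 and flatten the recording."""
    features = []
    for ax, ay, az, gx, gy, gz in recording:
        for a in (ax, ay, az):
            features.append((a + ACC_RANGE_G) / (2 * ACC_RANGE_G))
        for g in (gx, gy, gz):
            features.append((g + GYRO_RANGE_DPS) / (2 * GYRO_RANGE_DPS))
    return features


# Made-up example data: one complete recording and one that was cut short.
raw = """aX,aY,aZ,gX,gY,gZ
0.0,0.0,1.0,0.0,0.0,0.0
0.5,0.0,1.0,100.0,0.0,0.0
1.0,0.0,1.0,200.0,0.0,0.0
0.5,0.0,1.0,100.0,0.0,0.0

0.0,0.0,1.0,0.0,0.0,0.0
0.2,0.0,1.0,50.0,0.0,0.0
"""

recordings = split_recordings(raw)
usable = clean(recordings)
vectors = [to_feature_vector(rec) for rec in usable]
print(f"{len(recordings)} recordings read, {len(usable)} usable")
```

Each resulting vector has one entry per sensor value in the recording, which is the flat, fixed-length input a small classifier expects.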

To train the board to classify different movement sequences, the onboard IMU sensors of the Arduino Nano 33 BLE were used. Afterwards, the captured data was visualized in Python.

On a higher level, the students were introduced to a possible new paradigm in product design and art production, one that enables users, participants, and visitors to interact with products and objects in new ways, or even to retrain them, either to adapt them to new usage scenarios or to improve their performance in a desired way.

As with all our workshops, we emphasized a hands-on approach. Within the four days, all students developed working prototypes using the hardware we supplied.

Contextualizing Technology

office@same.vision

+49 30 2885 2595

Cotheniusstr. 6

D-10407 Berlin
